A delta or incremental load is a process that extracts only newly added or changed records from a source system before loading them into a target system. This is generally a good strategy for integration scenarios, since repeatedly reading and writing the entire dataset on each run is time-consuming and slows down the load process. Delta loads also reduce the payload volume, thereby streamlining your execution flow.

A few services, such as Dynamics CRM/Dataverse and Dynamics F&O, offer their own built-in change-capture features that can be used to build a robust incremental data load process. For other APIs and systems without such capabilities, a custom incremental load strategy needs to be implemented. Most services or APIs provide filtering options through a query language or query string parameters in the request URL, allowing users to apply conditional filters on a timestamp field such as "last modified datetime", "since last change datetime", or "changed date" to read only the records that have been modified after a certain date and time. Comparing such a field against the last run/execution time ensures that the Source component in your SSIS data flow reads only the records that were added or changed after that time. The basic logic is to identify and store the date and time of the last execution in an integration control table, which can then be assigned to a variable and used dynamically in the Source filter.

Note that there are other ways to perform change capture with KingswaySoft components, such as the Diff Detector or Premium Hash components, both included in our SSIS Productivity Pack. The focus of this blog post, however, is an incremental/delta load based on a timestamp field, as just discussed.

With that in mind, this post demonstrates a simple yet generic strategy that can be applied to almost any of your data flows using the necessary KingswaySoft components. In this demonstration, we will be using the following components:

- Premium SQL Server Command Task

- NAV/BC Source component (used here as an example; you can substitute any applicable source component)

Design Overview

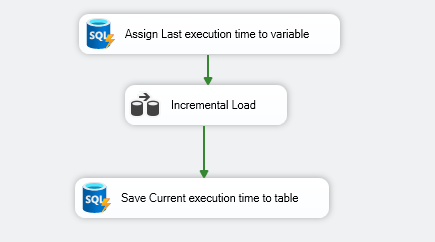

Below we have a general design overview from the control flow view. A prerequisite is a database table set up in your SQL Server environment that contains two columns: one for the process name, and the other for storing the last execution date and time. The process contains the following three SSIS tasks. The first one, a Premium SQL Server Command Task (Assign Last execution time to variable in the design), reads whatever value is currently available in the table and assigns it to a variable. This variable is used in the second task, a data flow task (Incremental Load), as a filter for the Source component(s). This is where your main data pull happens. The KingswaySoft Source components can be easily parameterized to accept the variable instead of a static value. Once the main incremental load is done, the third task (Save Current execution time to table) saves the new timestamp value in the integration control table so that it is used for subsequent delta loads.
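To make the flow concrete, here is a minimal Python sketch of the same three steps, run outside SSIS. It uses an in-memory SQLite database as a stand-in for SQL Server and a hard-coded list as a stand-in for the source system. The `run_delta_load` function, the sample rows, and the column types are assumptions made for illustration only; they are not what the KingswaySoft components do internally.

```python
import sqlite3
from datetime import datetime


def run_delta_load(conn, process_name, source_rows, start_time):
    """One package run: read the stored time, filter the source, save the cut-off."""
    # Task 1: read the last execution time for this process.
    row = conn.execute(
        "SELECT LastExecutionTime FROM LastRunTime WHERE ProcessName = ?",
        (process_name,),
    ).fetchone()
    last_run = datetime.fromisoformat(row[0])

    # Task 2: keep only rows modified after last_run and up to start_time.
    changed = [r for r in source_rows if last_run < r["modified"] <= start_time]

    # Task 3: save the cut-off so the next run starts from it.
    conn.execute(
        "UPDATE LastRunTime SET LastExecutionTime = ? WHERE ProcessName = ?",
        (start_time.isoformat(), process_name),
    )
    conn.commit()
    return changed


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE LastRunTime (ProcessName TEXT PRIMARY KEY, LastExecutionTime TEXT)"
)
conn.execute(
    "INSERT INTO LastRunTime VALUES (?, ?)",
    ("NAV/BC Delta Load", "2024-01-01T00:00:00"),
)

# Hypothetical source records with a last-modified timestamp.
source = [
    {"id": 1, "modified": datetime(2023, 12, 31, 9, 0)},
    {"id": 2, "modified": datetime(2024, 1, 2, 9, 0)},
    {"id": 3, "modified": datetime(2024, 1, 3, 9, 0)},
]

first = run_delta_load(conn, "NAV/BC Delta Load", source, datetime(2024, 1, 2, 12, 0))
second = run_delta_load(conn, "NAV/BC Delta Load", source, datetime(2024, 1, 4, 12, 0))
print([r["id"] for r in first])   # [2]
print([r["id"] for r in second])  # [3]
```

Each run returns only the records that changed since the previous run, and the second run starts exactly where the first one stopped.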

Let's look at each task in detail.

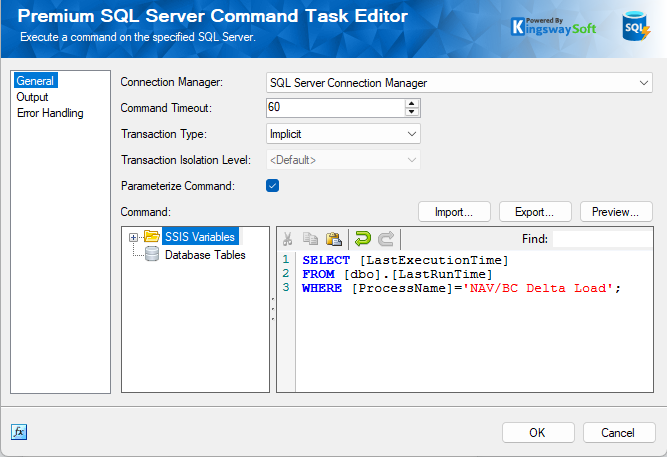

Assign Last Execution Time to Variable

Here, we use a Premium SQL Server Command Task to read from the table that has the last execution time. In our example, the table name is LastRunTime, and it has two columns - ProcessName (to be used as a key for Updates) and LastExecutionTime (which stores the datetime for the last execution). We use a SELECT statement to get the LastExecutionTime for the ProcessName "NAV/BC Delta Load" as this would be the name we have assigned for this current process. It is important to store and filter LastExecutionTimes based on process names to be able to use the same table for multiple delta loads.

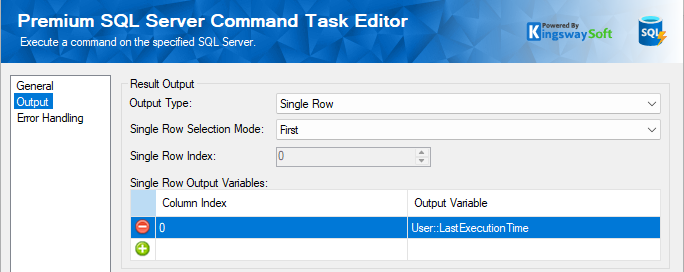

In the Output page, we can choose the Output type, and assign the single value we read from the table to the user variable @[User::LastExecutionTime].

Next, let's look at the second task, the data flow task.

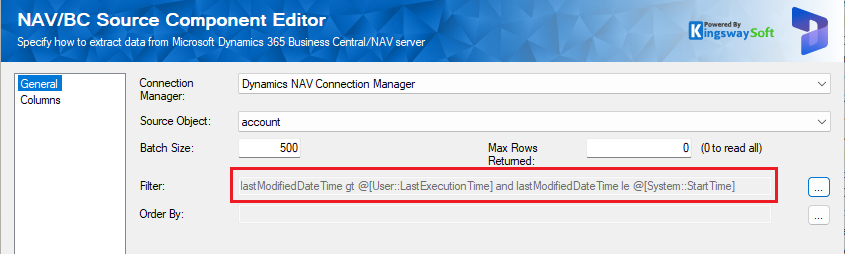

Incremental Load

Here, we use the Dynamics NAV/BC Source component as an example. It includes a datetime filter to retrieve the records that were modified after the last execution time. As you can see below, the LastModifiedDateTime in the NAV/BC object is compared against the variable to which we assigned the last execution time value from the table in our first task. It is also important to provide a cut-off datetime, so that the incremental data pull is limited to the window between the last execution time and the start time of the current process. This avoids inconsistencies across multiple processes and runs, and for that, we have added a "less than or equal to" filter against the current process start time.
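The effect of the two bounds is easiest to see with a couple of edge cases. The sketch below is plain Python rather than component configuration; `in_window` and the run times are hypothetical, and it assumes the lower bound is exclusive ("after the last execution time") while the upper bound is inclusive ("less than or equal to").

```python
from datetime import datetime


def in_window(modified, last_run, cutoff):
    """True when last_run < modified <= cutoff."""
    return last_run < modified <= cutoff


# Hypothetical run windows: run 2 starts where run 1 cut off.
run1 = (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 2, 0, 0))
run2 = (datetime(2024, 1, 2, 0, 0), datetime(2024, 1, 3, 0, 0))

at_cutoff = datetime(2024, 1, 2, 0, 0)  # modified exactly at run 1's start time
mid_run = datetime(2024, 1, 2, 0, 5)    # modified while run 1 was still executing

at_cutoff_hits = (in_window(at_cutoff, *run1), in_window(at_cutoff, *run2))
mid_run_hits = (in_window(mid_run, *run1), in_window(mid_run, *run2))
print(at_cutoff_hits)  # (True, False)
print(mid_run_hits)    # (False, True)
```

A record modified exactly at the cut-off is read once, by the first run, and a record modified while the first run is still executing is deferred to the next run instead of being missed.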

Also, it is worth noting that when time zone differences come into play, you may need to perform time zone conversions to ensure accurate delta pulls. KingswaySoft offers the Time Zone Conversion transformation component that can be used in such scenarios; you may need to slightly tweak the design to use data flows and derived columns in those cases to save and assign correct datetime values. Working with UTC values is another case where such adjustments are required. In our demonstration, we use our user's time zone as the base across the process, and hence no transformation is required.
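As a rough illustration of the kind of adjustment involved, the sketch below converts a locally stored last execution time to UTC before it would be used in a filter. This is plain Python, not the Time Zone Conversion component; the fixed UTC-5 offset and the `to_utc` helper are assumptions, and a fixed offset ignores daylight saving changes.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the control table stores local time at a fixed UTC-5 offset.
LOCAL = timezone(timedelta(hours=-5))


def to_utc(local_naive, tz=LOCAL):
    """Attach the assumed local offset to a naive datetime and convert it to UTC."""
    return local_naive.replace(tzinfo=tz).astimezone(timezone.utc)


last_run_local = datetime(2024, 1, 1, 20, 30)
last_run_utc = to_utc(last_run_local)
print(last_run_utc.isoformat())  # 2024-01-02T01:30:00+00:00
```

Note how the converted value falls on the next calendar day; filtering a UTC field with the unconverted local value would shift the window by five hours.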

Note: To assign a filter for this component specifically, we click on the ellipsis button and use the query builder. This would differ based on the Source you have chosen.

With the source component configured as above, its output contains only the changes that have happened since the previous incremental load, which can then be written to a target system using a destination component. Ideally, the destination component should support an Upsert operation, so that new and changed records can be handled in a single step.
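To show why Upsert fits delta loads well, here is a small Python sketch that uses a dictionary as a stand-in for the target system. The `upsert` function and the sample records are hypothetical; a real destination component would perform the equivalent matching against the target's key field.

```python
def upsert(target, rows, key="id"):
    """Insert rows whose key is new and overwrite rows whose key already exists."""
    inserted = updated = 0
    for row in rows:
        if row[key] in target:
            updated += 1
        else:
            inserted += 1
        target[row[key]] = dict(row)
    return inserted, updated


target = {1: {"id": 1, "name": "Contoso"}}
delta = [{"id": 1, "name": "Contoso Ltd"}, {"id": 2, "name": "Fabrikam"}]

first = upsert(target, delta)   # one insert, one update
replay = upsert(target, delta)  # replaying the same delta only updates
print(first, replay, len(target))  # (1, 1) (0, 2) 2
```

Because existing records are updated rather than inserted again, replaying the same delta does not create duplicates in the target.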

It is worth mentioning that the delta load uses System::StartTime as the cut-off time. This is important if your data load process involves multiple objects or entities, because every source is then read up to the same point in time, which ensures consistent data retrieval.

Now, let's move on to the third task.

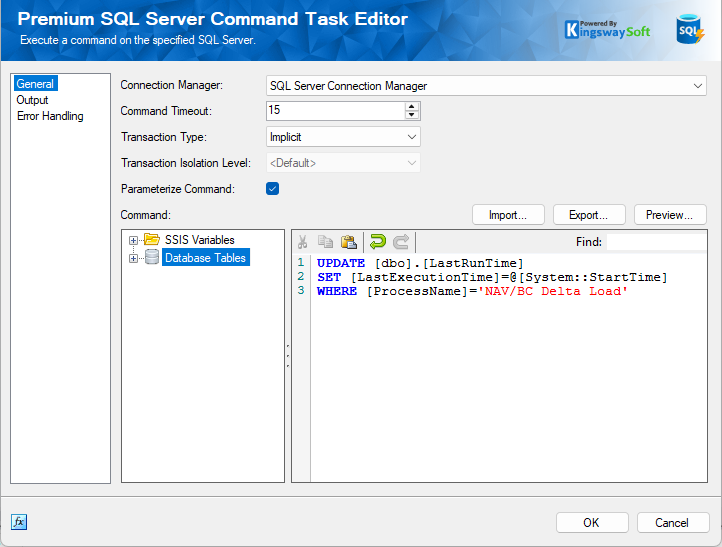

Save Current Execution Time to Table

After the delta load is finished, we need to save the cut-off time back to the integration control table. To do so, we use another Premium SQL Server Command Task, in which an UPDATE statement sets the LastExecutionTime column to the current process start time using the value of the System::StartTime variable. With this configuration in place, this value becomes the base time of the conditional filter for the next incremental read. Note that we use the ProcessName value in the WHERE clause to make sure that the value is saved for the associated process, preventing it from being mixed up with other data load processes.
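The role of the WHERE clause can be sketched as follows, again with Python and an in-memory SQLite database standing in for SQL Server. The second process name and the timestamps are hypothetical, and the parameter syntax in the actual command task will differ from the `?` placeholders used here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE LastRunTime (ProcessName TEXT PRIMARY KEY, LastExecutionTime TEXT)"
)
conn.executemany(
    "INSERT INTO LastRunTime VALUES (?, ?)",
    [
        ("NAV/BC Delta Load", "2024-01-01T00:00:00"),
        ("Other Delta Load", "2024-01-01T06:00:00"),  # hypothetical second process
    ],
)

start_time = "2024-01-02T12:00:00"  # stands in for System::StartTime
cur = conn.execute(
    "UPDATE LastRunTime SET LastExecutionTime = ? WHERE ProcessName = ?",
    (start_time, "NAV/BC Delta Load"),
)
conn.commit()

rows = dict(conn.execute("SELECT ProcessName, LastExecutionTime FROM LastRunTime"))
print(cur.rowcount)              # 1
print(rows["Other Delta Load"])  # 2024-01-01T06:00:00
```

Only the row for the current process changes; the other process keeps its own last execution time.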

Once the above three tasks are set up, you can execute the package. On the initial run, no last execution time has been recorded yet, so you can either leave the LastExecutionTime value empty or provide a minimum datetime value for the column, ensuring that all records from the Source system are pulled. Once the package has completed its execution, the StartTime of the current execution is written to the table. This value is then picked up by the first task during the next run and assigned to the variable used in the Source filter.
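One way to handle the initial run is to fall back to a minimum datetime when no value has been stored yet. The sketch below shows that idea in Python with an in-memory SQLite database; the `read_last_run` helper and the 1900-01-01 floor are assumptions, and you should choose a minimum value that your source system accepts. The sketch seeds a row with an empty LastExecutionTime, because an UPDATE statement only affects rows that already exist.

```python
import sqlite3
from datetime import datetime

MIN_DATETIME = datetime(1900, 1, 1)  # assumed floor for the first run


def read_last_run(conn, process_name):
    """Return the stored last execution time, or MIN_DATETIME if none exists."""
    row = conn.execute(
        "SELECT LastExecutionTime FROM LastRunTime WHERE ProcessName = ?",
        (process_name,),
    ).fetchone()
    if row is None or row[0] is None:
        return MIN_DATETIME
    return datetime.fromisoformat(row[0])


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE LastRunTime (ProcessName TEXT PRIMARY KEY, LastExecutionTime TEXT)"
)
conn.execute("INSERT INTO LastRunTime VALUES (?, NULL)", ("NAV/BC Delta Load",))

initial = read_last_run(conn, "NAV/BC Delta Load")

# After the first run, the start time is saved and becomes the new lower bound.
conn.execute(
    "UPDATE LastRunTime SET LastExecutionTime = ? WHERE ProcessName = ?",
    ("2024-01-02T12:00:00", "NAV/BC Delta Load"),
)
later = read_last_run(conn, "NAV/BC Delta Load")
print(initial)  # 1900-01-01 00:00:00
print(later)    # 2024-01-02 12:00:00
```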

Conclusion

By following the logic above, you can achieve incremental/delta loads from virtually any source system. The example provided in this blog post showcases the NAV/BC Source, which can be substituted with any service that supports filtering. Note that the same approach can also be applied to cases that require local filtering, conditional splits, or other such designs.

We hope this has been helpful!